Artificial intelligence systems powered by Large Language Models (LLMs) are becoming an increasingly accessible way to get answers and advice, despite known biases based on race and gender.

A new study has found strong evidence that we can now add political bias to the list, further demonstrating how emerging technology can unintentionally, and perhaps harmfully, shape society’s values and attitudes.

The research was conducted by computer scientist David Rozado of Otago Polytechnic in New Zealand and raises questions about how we are influenced by the bots we use for our information.

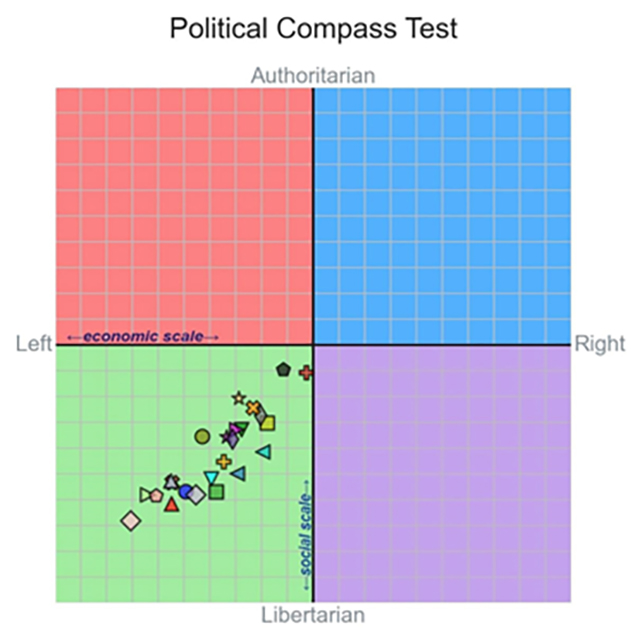

Rozado administered 11 standard political questionnaires, such as the Political Compass test, to 24 different LLMs, including OpenAI’s ChatGPT and Google’s Gemini chatbot. He found that the average political stance across all the models was not neutral.

“Most existing LLMs show left-wing political preferences when assessed with various political orientation tests,” Rozado said.

The average left-leaning preference was not strong, but it was significant. Further tests on custom bots, which users can fine-tune on additional data, showed that these AIs could be steered toward particular political preferences using left-of-center or right-of-center text.

Rozado also looked at base models like GPT-3.5, which the conversational chatbots are built on. He found no evidence of political bias there, although without the chatbot front-end it was difficult to collect the responses in a meaningful way.

As Google offers AI answers in its search results and more people turn to AI bots for information, there is concern that our thinking is being influenced by the answers we receive.

“As LLMs begin to partially replace traditional information sources such as search engines and Wikipedia, the societal implications of political biases embedded in LLMs are significant,” Rozado writes in his published paper.

How this bias gets into the systems is unclear, although there is no evidence that it is intentionally planted by the LLM developers. These models are trained on vast amounts of online text, and an imbalance of left-leaning over right-leaning material in the mix could be at play.

ChatGPT’s dominance in training other models could also be a factor, Rozado says, as the bot has previously been shown to be left of center in its political perspective.

Bots based on LLMs essentially use probability to determine which word should follow another word in their responses. This means that they are often inaccurate in what they say, even before we take into account different kinds of biases.
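To make that idea concrete, here is a minimal toy sketch in Python of choosing the next word by probability. It is an illustration only, not how any particular chatbot is implemented: the candidate words and their scores are invented for the example, and the helper names `softmax` and `pick_next_word` are hypothetical. Real models work on word fragments called tokens and choose among a far larger vocabulary.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def pick_next_word(scores, rng):
    """Sample one word at random, weighted by its probability."""
    probs = softmax(scores)
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Invented scores for the word following "The capital of Australia is".
# These numbers are assumptions for illustration, not real model output.
toy_scores = {"Canberra": 2.0, "Sydney": 1.5, "Melbourne": 0.5}

probs = softmax(toy_scores)
rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [pick_next_word(toy_scores, rng) for _ in range(1000)]

for word in toy_scores:
    print(f"{word}: p={probs[word]:.2f}, picked {samples.count(word)} times")
```

In this toy setup the correct answer is the most likely single choice, yet a plausible but wrong word is still picked a sizable share of the time, which is one way such a system can sound fluent and be mistaken.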

Despite the eagerness of tech companies like Google, Microsoft, Apple and Meta to push AI chatbots on us, perhaps it’s time to reassess how we should use this technology – and prioritize the areas where AI can truly be useful.

“It is crucial to critically examine and address the potential political biases inherent in LLMs to ensure a balanced, fair, and accurate representation of information in responses to user questions,” Rozado writes.

The research was published in PLOS ONE.