Apple Vision Pro’s eye-tracking technology offers a new way to type, but researchers have shown that it can be abused to steal sensitive information. Here’s what you need to know to protect your data.

New technologies always bring new vulnerabilities. One such vulnerability, GAZEploit, exposes users to potential privacy breaches in Apple Vision Pro FaceTime calls.

GAZEploit, developed by researchers from the University of Florida, CertiK Skyfall Team, and Texas Tech University, uses eye tracking data in virtual reality to guess what a user is typing.

When users put on a virtual or mixed reality device, such as the Apple Vision Pro, they can type by looking at keys on a virtual keyboard. Instead of pressing physical buttons, the device tracks eye movements to determine the letters or numbers selected.

Overview of the attack

The virtual keyboard is where GAZEploit comes in. It analyzes eye movement data and guesses what the user is typing.

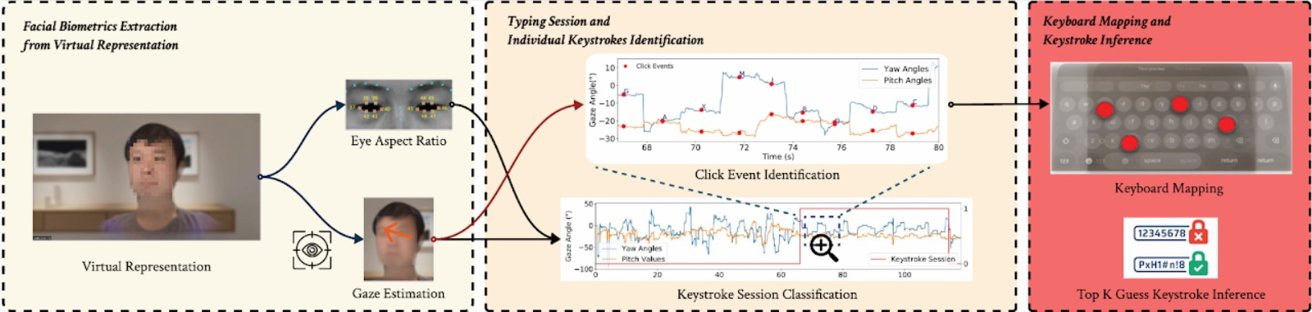

GAZEploit works by recording the eye movements of the user’s virtual avatar. It focuses on two signals: the eye aspect ratio (EAR), which measures how wide a person’s eyes are open, and gaze estimation, which tracks exactly where they’re looking on the screen.
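To make the EAR idea concrete, here is a minimal sketch using the common six-landmark formulation from the blink-detection literature. Whether GAZEploit computes EAR in exactly this way is an assumption; the function name and the sample landmark coordinates are invented for illustration.

```python
from math import dist


def eye_aspect_ratio(landmarks):
    """Return the eye aspect ratio for one eye.

    `landmarks` holds six (x, y) points: p1 and p4 are the eye corners,
    p2 and p3 lie on the upper lid, p5 and p6 on the lower lid
    (p2 sits above p6, p3 sits above p5).
    """
    p1, p2, p3, p4, p5, p6 = landmarks
    vertical = dist(p2, p6) + dist(p3, p5)   # two lid-to-lid distances
    horizontal = dist(p1, p4)                # corner-to-corner width
    return vertical / (2.0 * horizontal)


# Made-up landmark sets: a wide-open eye and a nearly closed one.
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.2), (4, 0.2), (6, 0), (4, -0.2), (2, -0.2)]

print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
```

A high value means the eye is open and a value near zero means it is closed, so a short dip in EAR over time marks a blink.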

By analyzing these factors, hackers can determine when the user is typing and even find out which specific keys they are pressing.

When users type in VR, their eyes move in a certain way and blink less often. GAZEploit detects this and uses a machine learning program called a recurrent neural network (RNN) to analyze these eye patterns.

The researchers trained the RNN with data from 30 different people and reported that the model could accurately identify typing sessions 98% of the time.
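The blink-rate cue can be illustrated with a much simpler stand-in for the RNN. The sketch below is a plain threshold heuristic, not the researchers’ model: it counts blinks in a series of EAR values and flags the window as typing-like when the blink rate is low. The function names and every threshold are hypothetical values chosen for the example.

```python
def count_blinks(ear_series, closed_threshold=0.2):
    """Count blinks as runs of frames where EAR drops below the threshold."""
    blinks = 0
    eye_closed = False
    for ear in ear_series:
        if ear < closed_threshold and not eye_closed:
            blinks += 1          # a new closed-eye run starts here
            eye_closed = True
        elif ear >= closed_threshold:
            eye_closed = False   # eye reopened, ready for the next blink
    return blinks


def looks_like_typing(ear_series, fps, max_blinks_per_minute=8.0):
    """Flag a window as typing-like when the blink rate is unusually low.

    The 8 blinks-per-minute cutoff is an illustrative assumption.
    """
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ear_series) / minutes < max_blinks_per_minute


# Synthetic one-minute windows at 30 frames per second.
relaxed = [0.05 if i % 90 < 3 else 0.3 for i in range(1800)]   # blink every 3 s
typing = [0.05 if i % 450 < 3 else 0.3 for i in range(1800)]   # blink every 15 s

print(looks_like_typing(relaxed, fps=30), looks_like_typing(typing, fps=30))
```

A learned model such as the RNN described above can combine this cue with gaze movement patterns instead of relying on a single fixed cutoff.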

Guessing the correct keystrokes

Once a typing session is identified, GAZEploit predicts keystrokes by analyzing rapid eye movements, called saccades, followed by pauses or fixations, when the eyes rest on a key. The attack maps these eye movements to the layout of a virtual keyboard and infers the letters or numbers being typed.
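The saccade-and-fixation step can be sketched in a few lines. This is a simplified illustration, not the researchers’ pipeline: it treats a large jump between consecutive gaze samples as a saccade, averages each stable run of samples into a fixation, and picks the nearest key on a hypothetical QWERTY grid. The key coordinates, thresholds, and function names are all assumptions made for the example.

```python
from math import dist

# Hypothetical key centres: one unit per key, each row shifted half a key.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_CENTRES = {
    key: (col + 0.5 * row, float(row))
    for row, keys in enumerate(ROWS)
    for col, key in enumerate(keys)
}


def centroid(points):
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def find_fixations(gaze, max_step=0.15, min_samples=4):
    """Group consecutive gaze samples into fixations.

    A step larger than `max_step` is treated as a saccade and ends the
    current group; groups shorter than `min_samples` are discarded.
    """
    fixations, cluster = [], []
    for point in gaze:
        if cluster and dist(point, cluster[-1]) > max_step:
            if len(cluster) >= min_samples:
                fixations.append(centroid(cluster))
            cluster = []
        cluster.append(point)
    if len(cluster) >= min_samples:
        fixations.append(centroid(cluster))
    return fixations


def infer_keys(gaze):
    """Map each fixation to the closest key centre."""
    return "".join(
        min(KEY_CENTRES, key=lambda k: dist(f, KEY_CENTRES[k]))
        for f in find_fixations(gaze)
    )


# Made-up gaze trace: rest on "h", jump, then rest on "i".
sample = [
    (5.5, 1.0), (5.52, 1.01), (5.48, 0.99), (5.51, 1.0), (5.5, 1.02),
    (6.3, 0.5),
    (7.0, 0.0), (7.02, 0.01), (6.98, -0.01), (7.01, 0.0), (7.0, 0.02),
]
print(infer_keys(sample))  # hi
```

In a real attack the keyboard’s position and size in the video would have to be estimated first; here the gaze samples are assumed to be already expressed in keyboard coordinates.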

GAZEploit can accurately identify selected keys by calculating the stability of gaze during fixations. In their tests, the researchers reported an accuracy of 85.9% in predicting individual keystrokes and a near-perfect recall of 96.8% in recognizing typing activity.

Because the attack can be carried out remotely, attackers only need to have access to video footage of the avatar to analyze eye movements and infer what is being typed.

Remote access means that even in everyday situations such as virtual meetings, video calls or live streaming, personal information such as passwords or confidential messages can be compromised without the user’s knowledge.

How to Protect Yourself from GAZEploit

To protect themselves from potential attacks like GAZEploit, users should take several precautions. First, they should avoid entering sensitive information, such as passwords or personal data, using eye-tracking methods in virtual reality (VR) environments.

Instead, it is safer to use physical keyboards or other secure input methods. It is also crucial to keep software up to date, as Apple often releases security patches to address vulnerabilities.

Finally, users can further reduce their exposure by adjusting the privacy settings on VR/MR devices to limit or disable eye tracking when it is not needed.